Artificial Intelligence is reshaping modern software development. For engineering teams, frontend developers, and tech leads, implementing machine learning is no longer optional—it's essential for building competitive applications. This guide gives you the skills to go from being curious about the subject to confidently building with it.

This guide provides everything you need to know about how to train an AI model, transforming you from a curious developer into a confident AI practitioner. We'll walk you through the entire process, from foundational concepts to advanced deployment strategies, ensuring you have the practical insights to innovate and lead.

TL;DR: How to Train an AI Model

To train an AI model, you typically follow five key steps:

Collect and preprocess data – clean, normalize, and label your dataset.

Choose an algorithm or framework – e.g., TensorFlow, PyTorch, or scikit-learn.

Train the model – feed data into the algorithm and adjust weights.

Evaluate performance – test with unseen data to measure accuracy.

Deploy and monitor – integrate into an application and retrain as needed.

Tip: Start small with open datasets and simple models before scaling to complex architectures.

What is AI Model Training?

AI model training is the process of feeding a machine learning algorithm data to help it identify patterns, make predictions, and learn. The term 'machine learning algorithm' can broadly refer to the model itself, the training process, or the combination of both. The goal is to create a model that can perform a specific task, like classifying images or translating text, without being explicitly programmed for every scenario.

AI models and their applications.

AI models are the engines of modern intelligent applications. They are used across countless industries to power features that feel like magic. Recent research highlights their expanding footprint; a 2025 report from Stanford's HAI shows that 78% of organizations reported using AI in 2024, a significant jump from 55% the previous year.

Importance of data in training an AI model.

Data is the lifeblood of any AI model. The quality, quantity, and relevance of your training data directly determine your model's performance. As a 2025 analysis by Lumina Datamatics emphasizes, high-quality training data is the backbone of machine learning success, making data preparation a critical, strategic function.

A prominent instance of this principle was Amazon's experimental recruiting tool. Because the system was trained on a decade of the company's resumes, which came predominantly from men, the model taught itself to penalize applicants for gender-specific phrases, such as "women's chess club captain." This case demonstrates that a model trained on biased data will produce biased and flawed results, making conscientious data preparation a critical, strategic function.

Common use cases for AI models (image, video, text, voice, etc.).

Image: Object detection in self-driving cars, facial recognition, and medical imaging analysis.

Video: Action recognition in security footage and content moderation on social media platforms.

Text: Sentiment analysis in customer feedback, language translation, and AI chatbots.

Voice: Voice assistants like Siri and Alexa, and real-time speech-to-text transcription.

Types of AI Models

Your approach to training will depend on the type of problem you're solving. AI models generally fall into three categories.

1) Supervised Learning: This is the most common type. You train the model on a labeled dataset, meaning each piece of data is tagged with the correct output. The model learns to map input to output.

Examples: Convolutional Neural Networks (CNNs) for image classification, Decision Trees for predictive modeling.

2) Unsupervised Learning: Here, the model works with unlabeled data and tries to find patterns and relationships on its own.

Examples: Generative Adversarial Networks (GANs) for creating synthetic data, K-Means Clustering for customer segmentation.

3) Reinforcement Learning: The model learns by interacting with an environment. It receives rewards or penalties for its actions, learning to maximize its reward over time.

Examples: Training agents for games (like AlphaGo) or robotic control systems.

What Do You Need to Train an AI Model

Before you begin, you need to assemble the right toolkit. This includes the necessary hardware, software, and, of course, data.

Hardware Requirements

Training complex AI models is computationally intensive. While you can train simple models on a standard laptop, deep learning tasks require specialized hardware.

The importance of high-performance GPUs and TPUs: Graphics Processing Units (GPUs) from NVIDIA (like the A100 or H100) and Google's Tensor Processing Units (TPUs) are designed to handle the parallel computations required for deep learning, drastically reducing training times.

Best laptops for training AI models: For local development, look for laptops with modern NVIDIA RTX series GPUs, such as the RTX 4070 or higher, and at least 16-32GB of RAM.

Software and Libraries

The AI ecosystem is rich with powerful, open-source tools that simplify the process of building and training models.

Key libraries: TensorFlow, Keras, PyTorch, Scikit-learn, etc.:

TensorFlow: Developed by Google, it's known for its production-ready ecosystem (TFX, TensorFlow Lite) and scalability. It holds a significant mindshare in enterprise AI.

PyTorch: Developed by Meta AI, it's celebrated for its Python-native feel and flexibility, making it a favorite in the research community. A 2025 analysis shows PyTorch is used in around 85% of deep learning research papers.

Keras: A high-level API that can run on top of TensorFlow, making it incredibly user-friendly for building and iterating on models quickly.

Scikit-learn: The go-to library for traditional machine learning algorithms, perfect for tasks that don't require deep learning.

Overview of AI development platforms (Google Cloud Vertex AI, Azure AI, etc.): Cloud platforms offer managed services that handle infrastructure, allowing you to focus on model development. They provide access to powerful hardware, pre-configured environments, and MLOps tools for managing the entire lifecycle.

Data Requirements

Data is the cornerstone of your project. The success of your model hinges on the quality and quantity of the data you use.

How much data is needed to train an AI model? This is a common question, and the answer is: it depends. The complexity of the task is a major factor. Simple classification might work with a few thousand examples, while training a large language model requires billions of data points.

Types of data for different models (images, videos, text, etc.): The data you collect must match your model's objective. For an image classifier, you need labeled images; for a sentiment analyzer, you need labeled text.

Data preprocessing: Cleaning, normalization, and augmentation: Raw data is almost never ready for training. Preprocessing is a critical step that involves:

Cleaning: Removing duplicates, correcting errors, and handling missing values.

Normalization: Scaling numerical data to a standard range (e.g., 0 to 1) to help the model converge faster.

Augmentation: Artificially expanding your dataset by creating modified copies of existing data (e.g., rotating or flipping images).

How To Train An AI Model? (7-Step Process)

With the prerequisites in place, we can now dive into the practical steps of how to train an AI model. This structured approach will guide you from a problem statement to a deployed solution:

Step 1: Define the Problem

First, clearly articulate what you want your AI model to achieve. Are you building an image recognition tool, a speech-to-text engine, or a recommendation system?

Understand the desired output and the performance metrics you'll use to measure success. A clear objective will guide every subsequent decision.

Step 2: Gather and Prepare Data

This step is often the most time-consuming but also the most critical. You need to collect a high-quality dataset that is relevant to your problem.

For supervised learning, you'll need to label or annotate your data, which means tagging it with the correct answers. For example, in a dataset for a cat vs. dog classifier, each image must be labeled "cat" or "dog." Handling unstructured data like text or images often requires significant effort to structure it for the model. As discussed on Reddit, this process is foundational to teaching an AI.

Step 3: Choose the Right Model

Selecting the right model architecture is key. Your choice will depend on your task and the type of data you have.

CNNs are the standard for image-related tasks.

Recurrent Neural Networks (RNNs) or Transformers are ideal for sequential data like text or time series.

Specialized models like Stable Diffusion (for image generation) or BERT (for NLP tasks) can be fine-tuned for specific applications.

Step 4: Split the Data for Training and Testing

You can't use the same data to train your model and test its performance. Doing so would be like giving a student the answers to an exam before they take it. You need to split your dataset into three parts:

Training Set: The largest portion, used to train the model.

Validation Set: Used to tune the model's hyperparameters during training.

Test Set: Used for the final evaluation of the model's performance on unseen data.

A common split is 70% for training, 15% for validation, and 15% for testing.

Step 5: Train the Model

This is where the learning happens. You'll feed the training data to your model and let it adjust its internal parameters (weights) to minimize the difference between its predictions and the actual labels.

You'll configure hyperparameters like the learning rate, batch size, and the number of epochs (passes through the training data).

Example: How to train an AI model with Python

Here’s a simplified Python example using Keras to train a simple image classifier:

import tensorflow as tf |

For larger datasets that do not fit into memory, using the tf.data API is advisable for building efficient data pipelines. This process of how to train an AI model can be greatly simplified using cloud platforms.

For a practical look at this process, the following video provides an excellent overview of training a specialized model on Google's Vertex AI.

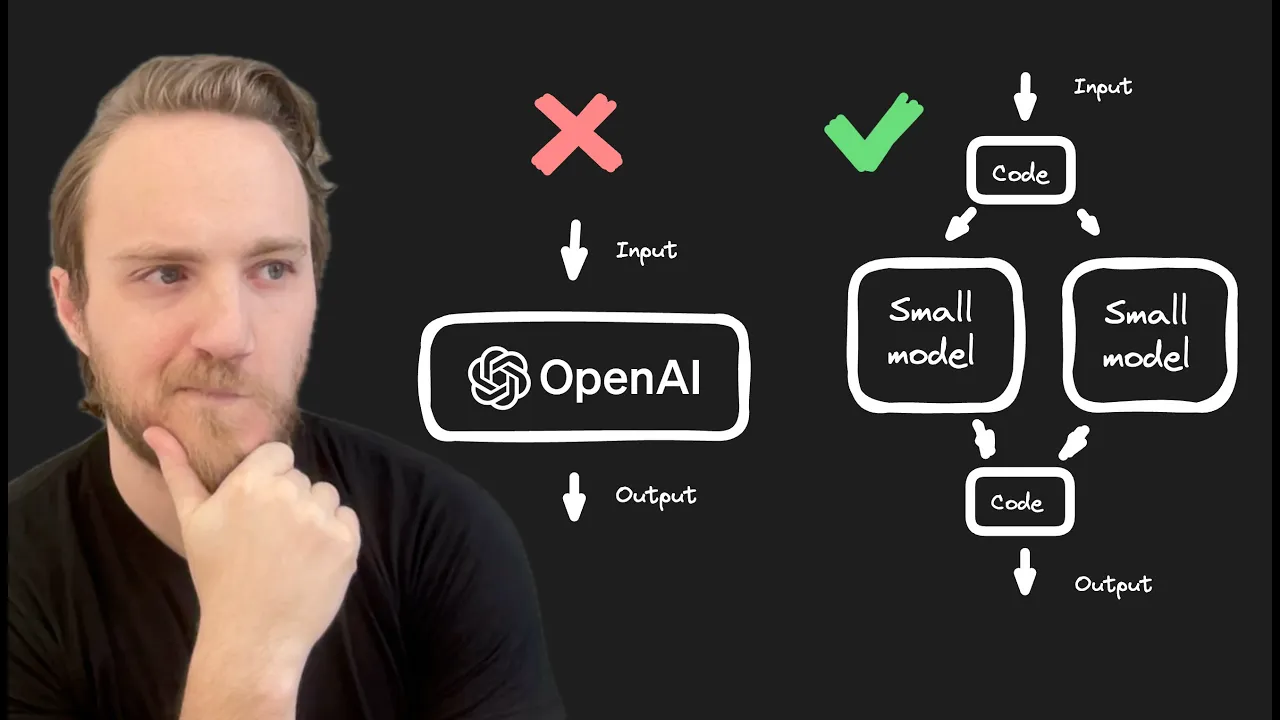

The presenter demonstrates how breaking down a complex problem, leveraging plain code where possible, and using cloud tools for heavy lifting can lead to faster, cheaper, and more reliable results than using general-purpose LLMs. Key takeaways include generating quality data, using built-in cloud tooling for training and deployment, and combining specialized models with traditional code for a robust solution.

Step 6: Evaluate and Optimize the Model

Once training is complete, you must evaluate your model's performance on the test set. Key metrics include:

Accuracy: The percentage of correct predictions.

Precision: The proportion of positive identifications that were actually correct.

Recall: The proportion of actual positives that were identified correctly.

F1 Score: The harmonic mean of precision and recall.

If performance is not satisfactory, you'll need to iterate. This involves tuning hyperparameters, trying a different model architecture, or gathering more/better data.

Step 7: Deployment and Monitoring

Once your model is trained, it's time to bring it to life in a production environment. This could involve deploying it on a local server, a cloud platform like AWS or Google Cloud, or directly onto an edge device for real-time processing.

A great way to get started, especially for rapid prototyping, is to leverage the vast collection of pre-trained open-source models available on platforms like Hugging Face. This approach can significantly speed up your development process, as you can build upon existing state-of-the-art models rather than starting from scratch.

For creating interactive web applications for your models, tools like Streamlit offer a straightforward way to build and share data apps with minimal effort. This allows users to easily interact with your model without needing to be experts in the underlying code.

After deployment, the work isn't over. It's crucial to continuously monitor your model's performance on new, real-world data. This helps you detect and address model drift, a phenomenon where the model's accuracy degrades over time as the data it encounters in production differs from the data it was trained on. Regular monitoring ensures your model remains effective and reliable.

What Are The Types of AI Models You Can Train?

Let's explore some specific types of models and what it takes to train them. This is where you can truly appreciate the versatility of knowing how to train an AI model.

1) AI Image Model

Training an AI image model typically involves tasks like image classification (what is in the image?) or object detection (where are the objects in the image?). Frameworks like TensorFlow and PyTorch, with their high-level APIs like Keras, provide pre-built layers and tools specifically for handling image data.

2) AI Video Model

Training on video data is more complex than images because it introduces a temporal dimension. You need to analyze sequences of frames to understand actions or events. This requires more significant computational resources and specialized architectures like 3D CNNs or a combination of CNNs and RNNs.

3) AI Voice Model

Want to know how to train an AI model to understand your voice? This involves training a speech-to-text model. The process requires large datasets of audio clips paired with their corresponding transcripts. You can even fine-tune existing models to create a system that talks like you.

This video provides a comprehensive workflow for building production-level machine learning models, which is highly relevant for speech and other data types. It emphasizes a structured pipeline:

Data cleaning (removing duplicates and corrupted data)

Transformation (encoding data numerically)

Pre-processing (scaling features to speed up training)

And proper data splitting

It also covers hyperparameter tuning, model selection, and the importance of choosing the right evaluation metrics, offering a robust framework for any serious ML project.

4) AI Chat Model

Training an AI chatbot involves using Large Language Models (LLMs) like GPT or BERT. While training a foundational model from scratch is beyond the scope of most teams, you can fine-tune a pre-trained LLM on your own data (e.g., company documents or support tickets) to create a chatbot that understands your specific domain. This is a powerful way to build custom conversational AI experiences.

Training AI Models Locally vs. on the Cloud

A key decision in your journey of learning how to train an AI model is where you'll do the actual training.

Feature | Training Locally | Training on the Cloud |

Pros | ✅ Full control over hardware/software | ✅ Access to state-of-the-art hardware (GPUs/TPUs) on demand |

Cons | ❌ Significant upfront investment in hardware | ❌ Costs can escalate quickly if not managed |

Pros and Cons of Training Locally

Pros: Full control over your hardware and software stack; potentially lower cost for frequent, smaller experiments if you already own the hardware.

Cons: Significant upfront investment in powerful GPUs; responsibility for maintenance, setup, and troubleshooting; limited scalability. A discussion on the Level1Techs forum highlights the complexities and potential pitfalls of setting up a local training environment.

Pros and Cons of Cloud Training

Pros: Access to state-of-the-art hardware (GPUs, TPUs) on demand; pay-as-you-go pricing model; scalability to handle massive datasets and models; managed services that simplify MLOps.

Cons: Costs can escalate quickly if not managed carefully; potential data privacy concerns for sensitive information; less control over the underlying infrastructure. A 2025 report on AI development costs shows that cloud compute costs can range from $500 to over $10,000 per month depending on usage.

How Much Data Do You Need to Train an AI Model?

This is one of the most pressing questions for anyone learning how to train an AI model. The answer involves a trade-off between quantity and quality.

Data Quantity vs. Data Quality

While more data is generally better, data quality is far more important. A small, clean, well-labeled dataset will produce a better model than a massive, noisy, and poorly labeled one. As one Reddit thread discusses, starting with a focused, high-quality dataset is often the best approach.

Data Augmentation Techniques

What if you have a small dataset? You can use data augmentation to artificially increase its size. For images, this involves techniques like:

Rotating

Cropping

Flipping

Changing brightness or contrast

These techniques create new training examples, helping the model become more robust and less prone to overfitting.

What Are The Common Mistakes to Avoid When Training an AI Model?

The path to a production-ready model is fraught with potential pitfalls. Here are some common mistakes to avoid:

Overfitting vs. Underfitting: Overfitting is when your model learns the training data too well, including its noise, and performs poorly on new data. Underfitting is when the model is too simple to capture the underlying patterns.

Not preparing or cleaning the data correctly: Garbage in, garbage out. Data errors will directly lead to a poorly performing model.

Using incorrect evaluation metrics: Using accuracy on an imbalanced dataset, for example, can be highly misleading.

Not experimenting with different algorithms or architectures: There is no one-size-fits-all model. It's crucial to experiment to find the best approach for your specific problem.

Conclusion

Understanding how to train an AI model is no longer optional for ambitious developers and engineering teams; it's essential. From defining a problem and preparing data to training, evaluating, and deploying a model, the process is a systematic journey that combines data science, software engineering, and domain expertise.

By leveraging powerful frameworks like TensorFlow and PyTorch, utilizing cloud platforms for scalability, and focusing relentlessly on data quality, you can build intelligent, production-ready applications that define the future.

We encourage you to start your journey today. Begin with a small project, experiment with different models, and embrace the iterative process of optimization. The resources and knowledge are more accessible than ever, and the potential to create transformative technology is in your hands.

FAQs on Training AI Models

1) Can I train my own AI model?

Yes! With the right tools, a quality dataset, and a clear objective, you can train your own custom AI model from scratch or, more commonly, fine-tune an existing pre-trained model for your specific needs.

2) How are AI models trained?

AI models are trained by feeding them large amounts of data. During this process, an optimization algorithm adjusts the model's internal parameters to minimize the error between its predictions and the actual ground truth, effectively teaching it to recognize patterns.

3) What is the best way to train an AI?

The best way depends entirely on your project's scope, data availability, and resources. Cloud platforms like Google Vertex AI or Azure ML are often best for large-scale tasks, while local training can be efficient for smaller experiments.

4) Can you train your own OpenAI model?

You cannot train OpenAI's foundational models like GPT-4 from scratch. However, OpenAI provides an API that allows you to fine-tune their models on your own data, enabling you to create specialized versions for your specific use cases.

5) How much data do I need to train an AI model?

It depends on the complexity of the model. Simple classification tasks may only require a few thousand samples, while deep learning models often need millions. Using pre-trained models and transfer learning can significantly reduce data requirements.

6) What hardware do I need to train AI models?

Small models can be trained on a laptop CPU, but for deep learning tasks, GPUs (like NVIDIA RTX series) or cloud-based compute services (AWS, Google Cloud, Azure) are recommended for faster training.

7) What are common mistakes when training an AI model?

Using unclean or biased data.

Overfitting (model performs well on training data but poorly on new data).

Skipping proper evaluation metrics.

Ignoring ethical concerns like bias and fairness.

8) Can I train an AI model without coding?

Yes, there are low-code and no-code platforms like Google AutoML, Microsoft Azure ML Studio, and RunwayML that let you train models with minimal programming knowledge.

9) How long does it take to train an AI model?

Training time varies from minutes (for small models on local machines) to days or weeks (for large neural networks on specialized hardware). Factors include dataset size, algorithm complexity, and available compute power.